Home Cloud Server

Table of Contents

- Home Cloud Server

- Table of Contents

- Introduction

- Past Configurations and Inspirations

- Current Configurations

- Future Upgradability

Introduction

Most of this project is documented in this README, along with its history and much of the troubleshooting done to get the server to where it is now.

Old versions of this document are available in the Old Versions 📁 folder.

Past Configurations and Inspirations

This project started for me in 2017, when I first heard about FreeNAS while learning how to build computers on YouTube. Like most people new to the server space, I thought a server was an extremely complicated machine that would be very difficult to understand. Now I know that a server is just a regular computer with different hardware requirements, running a different operating system. So began my first FreeNAS server: an old laptop with a single 160 GB hard drive, booting from a USB drive. This system worked for what it was, but it was limited by the laptop's single 100 Mbps Ethernet port and was only used for archival data.

It was eventually upgraded to a 1 TB hard drive and a 250 GB boot SSD, which let me use more features of the newly released TrueNAS Core, such as a Plex media server. However, with no hardware redundancy and a cheap drive, the NAS's storage drive eventually became corrupted and all the data on it was lost.

v1.0

With that data loss in mind, as well as plans for virtualization, it was important to start with a system that would not be easily corrupted. That meant some form of redundancy; the options I considered were RAID 1 (mirroring), RAIDZ1 and RAIDZ2. I eventually settled on three 4 TB drives in RAIDZ1, which allows one drive to fail completely without any data loss.
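As a rough comparison of the options above for three 4 TB drives, the sketch below computes usable space and how many drive failures each layout survives. It uses a simplified model (a mirror keeps one drive's worth of data, RAIDZ1/RAIDZ2 give up one/two drives' worth to parity) and ignores ZFS metadata, padding and TB-vs-TiB differences, so real usable space will be somewhat lower. The function name is just for illustration.

```python
def layout_summary(kind: str, drives: int, drive_tb: float) -> tuple[float, int]:
    """Return (approximate usable TB, drive failures survived) for one vdev.

    Simplified model: ignores ZFS metadata, padding and slop space.
    """
    if kind == "mirror":
        # Every drive holds a full copy, so capacity is one drive's worth
        # and all but one drive can fail.
        return drive_tb, drives - 1
    parity = {"raidz1": 1, "raidz2": 2, "raidz3": 3}[kind]
    if drives <= parity:
        raise ValueError(f"{kind} needs more than {parity} drives")
    # Parity consumes the equivalent of `parity` drives of space.
    return (drives - parity) * drive_tb, parity


if __name__ == "__main__":
    for kind in ("mirror", "raidz1", "raidz2"):
        tb, failures = layout_summary(kind, drives=3, drive_tb=4.0)
        print(f"{kind:>7}: ~{tb:.0f} TB usable, survives {failures} drive failure(s)")
```

In this model RAIDZ1 offers twice the usable space of the other two layouts, at the cost of surviving only a single drive failure.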

For the rest of the hardware, I reused as much as I could, which meant a Ryzen 7 2700X with 32 GB of ECC RAM.

Current Configurations

There are two servers in the 'datacenter'.

TrueNAS

After using the v1.0 configuration for 2 years, it was clear that improvements could be made to scalability, usability and reliability. To that end, the server was upgraded to an AMD EPYC 7551P with 128 GB of RAM. The storage layout stayed the same: it reuses the existing two 3-wide RAIDZ1 vdevs of 4 TB drives and adds 2 more vdevs to increase storage space. Following the commonly cited ZFS guideline of 1 GB of RAM per TB of storage, the extra memory supports up to 128 TB of storage, and until that much storage is added, the spare RAM can be used for virtualization, which is discussed in more detail in a later section.
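The RAM sizing above works out roughly as follows. This is a sketch under stated assumptions: it treats the 12-drive array as four 3-wide RAIDZ1 vdevs of 4 TB drives, uses a simplified parity model (one drive of parity per vdev, no ZFS overhead), and applies the 1 GB-per-TB guideline literally.

```python
RAM_GB = 128
GB_RAM_PER_TB = 1.0  # rule of thumb, not a hard ZFS requirement


def raidz1_pool_tb(vdevs: int, width: int, drive_tb: float) -> float:
    """Approximate usable TB for a pool of identical RAIDZ1 vdevs."""
    # Each RAIDZ1 vdev loses one drive's worth of space to parity.
    return vdevs * (width - 1) * drive_tb


def ram_headroom_gb(pool_tb: float, ram_gb: float = RAM_GB) -> float:
    """RAM left after setting aside GB_RAM_PER_TB for each TB in the pool."""
    return ram_gb - pool_tb * GB_RAM_PER_TB


if __name__ == "__main__":
    ceiling_tb = RAM_GB / GB_RAM_PER_TB
    # Assumed layout: 4 vdevs x 3 drives x 4 TB (12 drives).
    pool_tb = raidz1_pool_tb(vdevs=4, width=3, drive_tb=4.0)
    print(f"Rule-of-thumb ceiling: {ceiling_tb:.0f} TB")
    print(f"Current pool: ~{pool_tb:.0f} TB usable")
    print(f"RAM not claimed by the rule (available for VMs): ~{ram_headroom_gb(pool_tb):.0f} GB")
```

In practice the ZFS ARC grows and shrinks with available memory rather than reserving a fixed amount, so this is a planning heuristic, not a hard split between storage and VMs.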

Besides the 12-drive HDD array, there is a mirror of two 250 GB SSDs for boot and a three-drive RAIDZ1 of 250 GB SSDs for virtual machine zvols and Docker storage. There is also a single Kioxia CD6-R 3.84 TB drive, with no redundancy, that a LanCache VM uses for its cache storage.

This server runs TrueNAS SCALE ElectricEel (TrueNAS-24.10.2.2).

For expansion cards, this server has an NVIDIA Quadro P2000 for Plex transcoding, an LSI 9207-8i that, together with the 8 onboard SATA ports, connects 16 drives, and an LSI 9200-8e connected to a 45-bay Supermicro JBOD I got off eBay. There is also a Mellanox ConnectX-3 CX354A dual-port 40GbE QSFP+ network card.

Proxmox

After running Proxmox VE 7 in a virtual machine on the original v1.0 configuration, I reused the v1.0 components to run Proxmox VE 8.4 on bare metal. The server keeps the Ryzen 7 2700X with 32 GB of ECC RAM and adds two 500 GB SSDs as a mirrored boot device. Proxmox can also use this storage for VMs and LXCs, which is enough for my current needs. This server also has a dual-port 10G NIC aggregated with LACP for 20 Gbps of total bandwidth (a single connection is still limited to 10 Gbps, because LACP assigns each flow to one link).
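To illustrate why LACP gives 20 Gbps of aggregate bandwidth rather than a single 20 Gbps pipe, here is a toy model of how a bond picks a link per flow. The hash is a simplified stand-in for a layer3+4 transmit hash policy, not the exact formula Linux or any switch uses, and the addresses and ports are made up.

```python
import ipaddress
from collections import Counter


def pick_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
              links: int = 2) -> int:
    """Toy layer3+4 hash: every packet of one flow maps to the same link."""
    ip_bits = int(ipaddress.ip_address(src_ip)) ^ int(ipaddress.ip_address(dst_ip))
    port_bits = src_port ^ dst_port
    return (ip_bits ^ port_bits) % links


if __name__ == "__main__":
    # Hypothetical: one client opening 8 connections to one server on port 443.
    flows = [("10.0.10.5", "10.0.10.20", 50000 + i, 443) for i in range(8)]
    usage = Counter(pick_link(*flow) for flow in flows)
    print(dict(usage))  # {0: 4, 1: 4}: flows spread out, but each flow uses one link
```

The takeaway is that LACP raises total throughput when there are many flows (several clients, or parallel connections), but any single transfer still tops out at one link's 10 Gbps.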

This Proxmox server runs multiple virtual machines and Linux containers. The VMs include a Windows Minecraft server VM that manages backups, server restarts and updates. The Linux containers (LXCs) run some Docker-based game servers, such as CS2 and Garry's Mod, and, more importantly, host multiple services that I have created; for more information, visit my Portfolio Site 💼. The LXCs associated with websites also run self-hosted GitHub Actions runners, which handle continuous deployment whenever a new Docker image of the site is available.

Networking

The networking setup is not too advanced. A Ubiquiti Dream Machine Pro Max (UDM-Pro-Max) handles routing, including the subnet set up for the data center, and points clients at the local DNS. A Brocade ICX6610-48-E switch is connected to the UDM at 10G using a direct attach cable from FS.com. The Brocade switch has all licenses unlocked, following a guide from Fohdeesha. With the licenses, the switch provides 48 1G RJ45 ports, 8 10G SFP+ ports, 8 more 10G ports broken out from two 40G QSFP+ ports using QSFP+ to 4x10G DAC cables, and 2 other 40G QSFP+ ports. This makes the switch extremely versatile and cost-efficient.

These devices let me connect the servers at high speeds, so they can communicate quickly with each other and with clients on and off the local network.

HAProxy Load Balancer

To manage inbound connections for the different websites running on the local network, an HAProxy load balancer accepts requests on port 443, routes each one to the correct local web server, and encrypts traffic using SSL certificates from Cloudflare or Let's Encrypt, depending on the service's needs.
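Conceptually, the routing step maps the hostname a client asked for (from TLS SNI or the HTTP Host header) to a backend server. The sketch below models that lookup in Python; the hostnames and backend addresses are hypothetical placeholders, and in HAProxy itself this is done declaratively with ACLs and `use_backend` rules or a map file rather than code.

```python
from typing import Optional

# Hypothetical hostname -> backend table; replace with the real services.
BACKENDS = {
    "portfolio.example.com": "10.0.20.11:8080",
    "api.example.com": "10.0.20.12:3000",
}
DEFAULT_BACKEND: Optional[str] = None  # None means "reject unknown hosts"


def route(host_header: str) -> Optional[str]:
    """Return the backend for a request's host, or DEFAULT_BACKEND if unknown."""
    # Host headers may carry a port and are case-insensitive.
    # (Hostnames only; bracketed IPv6 literals are not handled here.)
    host = host_header.split(":", 1)[0].strip().lower().rstrip(".")
    return BACKENDS.get(host, DEFAULT_BACKEND)


if __name__ == "__main__":
    for h in ("Portfolio.Example.com", "api.example.com:443", "unknown.example.com"):
        print(h, "->", route(h))
```

Keeping the mapping in one table (or one HAProxy map file) means adding a new site only touches that table.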

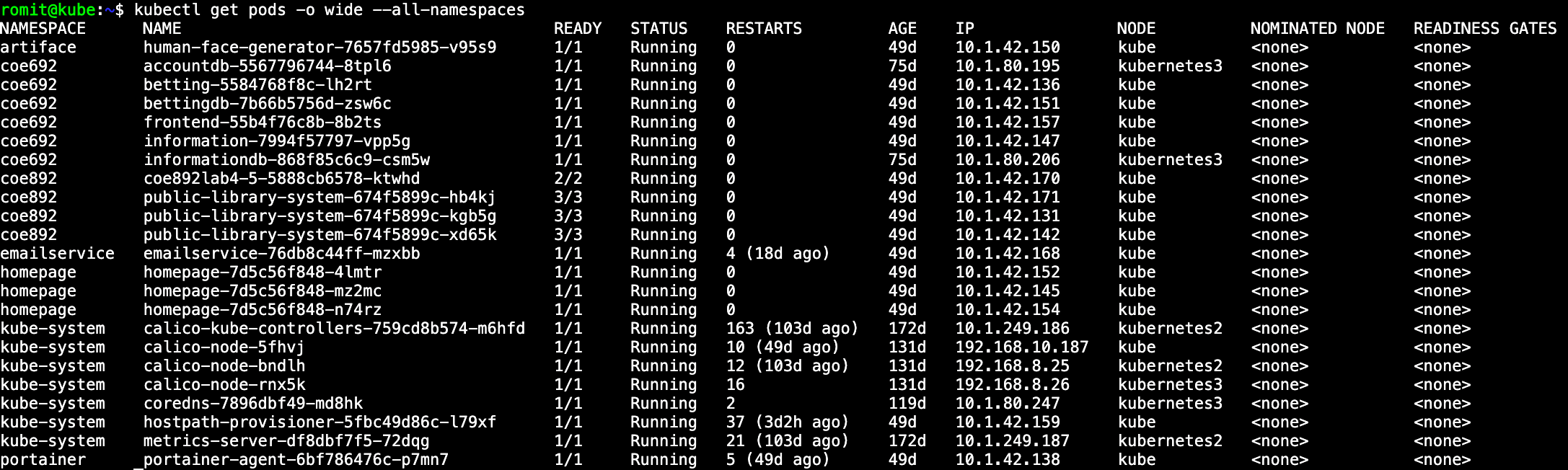

Kubernetes

Three MicroK8s Kubernetes nodes are spread across the two servers: the primary node runs in a virtual machine on the TrueNAS server because of its large amount of RAM, and the other 2 run on the Proxmox server as backups in case something goes wrong with the primary node.
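One thing worth checking with this layout: with three or more nodes, MicroK8s enables HA mode automatically, and its dqlite datastore uses Raft, so it needs a majority of voters to stay writable. The sketch below does that majority arithmetic and checks which single-host failure the node placement survives. It assumes all three nodes joined as full (non-worker) nodes acting as the three voters, and the node names are hypothetical.

```python
# Hypothetical node names; placement mirrors the layout described above.
PLACEMENT = {
    "k8s-node-1": "truenas",
    "k8s-node-2": "proxmox",
    "k8s-node-3": "proxmox",
}


def quorum(voters: int) -> int:
    """Smallest strict majority of `voters`."""
    return voters // 2 + 1


def survives_host_loss(placement: dict[str, str], failed_host: str) -> bool:
    """True if the nodes left after `failed_host` goes down still form a majority."""
    remaining = sum(1 for host in placement.values() if host != failed_host)
    return remaining >= quorum(len(placement))


if __name__ == "__main__":
    print("quorum:", quorum(len(PLACEMENT)))
    for host in sorted(set(PLACEMENT.values())):
        print(f"lose {host}: keeps quorum = {survives_host_loss(PLACEMENT, host)}")
```

Under these assumptions the cluster keeps quorum if the TrueNAS host goes down, but not if the Proxmox host does, since that takes two of the three voters with it.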

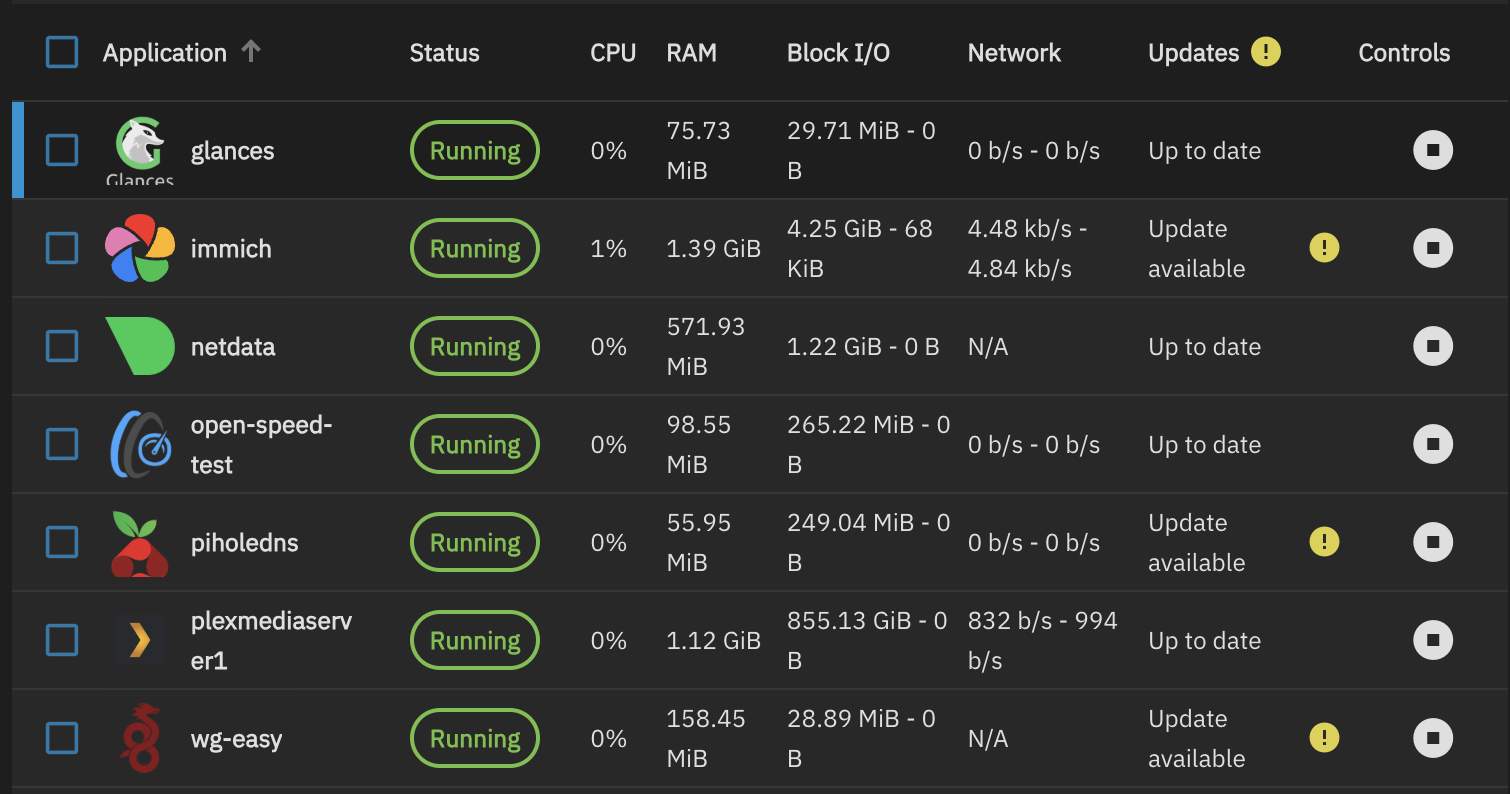

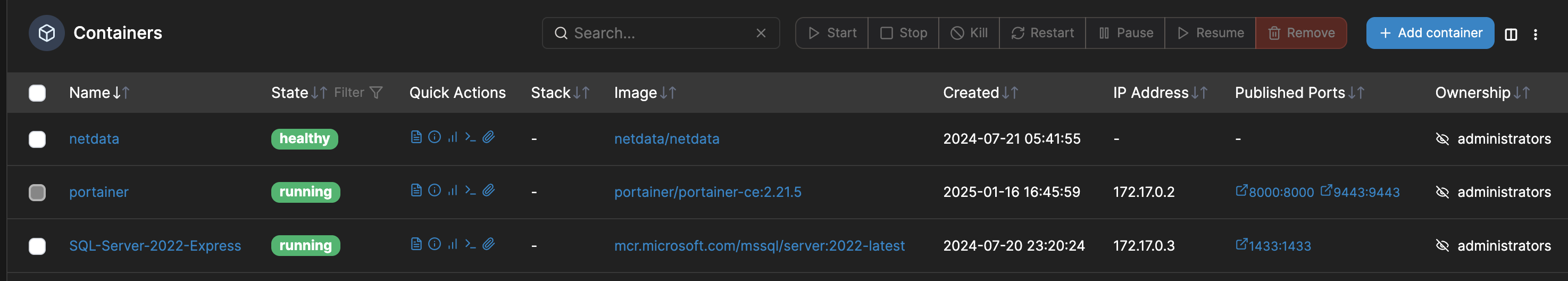

Docker

Docker containers run on both servers, managed through the TrueNAS GUI on the TrueNAS server and through Portainer on the Proxmox server, as shown in the images below. For example, the TrueNAS server runs storage-heavy apps such as Plex Media Server and Immich, while the Proxmox server hosts a Microsoft SQL Server 2022 Express container.

TrueNAS Server:

Proxmox Server:

DNS (LanCache and Pi-hole)

LanCache and Pi-hole instances run on the TrueNAS server: the Pi-hole DNS server runs in Docker, and the LanCache DNS runs in a VM that also has the Kioxia CD6-R 3.84 TB drive for caching data.
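LanCache works by answering DNS queries for known game and CDN download hostnames with the cache's own IP, so clients download through the cache without any client-side configuration, while every other name resolves normally. The sketch below models that decision as a suffix match. The domain list and IP addresses are hypothetical placeholders (not LanCache's real cache-domains list), and forwarding everything else to Pi-hole is an assumption for illustration.

```python
CACHE_IP = "10.0.10.50"  # hypothetical LanCache VM address
UPSTREAM = "10.0.10.53"  # hypothetical upstream resolver (e.g. Pi-hole)

# Hypothetical entries; the real list is much longer and service-specific.
CACHED_SUFFIXES = ("cdn.games.example", "dl.launcher.example")


def decide(qname: str) -> tuple[str, str]:
    """Return ("answer", CACHE_IP) for cached domains, else ("forward", UPSTREAM)."""
    name = qname.lower().rstrip(".")
    for suffix in CACHED_SUFFIXES:
        # Exact match or a true subdomain (match on a dot boundary).
        if name == suffix or name.endswith("." + suffix):
            return ("answer", CACHE_IP)
    return ("forward", UPSTREAM)


if __name__ == "__main__":
    for q in ("eu.cdn.games.example.", "evilcdn.games.example", "github.com"):
        print(q, "->", decide(q))
```

Matching on a dot boundary matters so that a lookalike name such as `evilcdn.games.example` is not captured by the `cdn.games.example` entry.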

To better visualize this data, I modified an existing LanCache UI project to add features that are more useful to me. Details are available here.

Custom Mail Server (MailCow)

I also run a custom mail server in a virtual machine on the TrueNAS server. It uses mailcow: dockerized, which runs the services needed to host a mail server, including sending and receiving email, with Docker and Docker Compose. To get around residential internet restrictions that block port 25 for SMTP, I use a Gmail catch-all to receive email, which is then delivered to the correct local mailboxes, and Brevo as an outbound relay: outgoing messages are sent to Brevo, which forwards them to their recipients.

This is what I added just before the `# DO NOT EDIT ANYTHING BELOW #` section in `mailcow-dockerized/data/conf/postfix/main.cf`:

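```
# Relay all outbound mail through Brevo with SASL auth (brackets skip the MX lookup)
smtp_sasl_auth_enable = yes
# hash: maps are read from the .db file that postmap builds from sasl_passwd
smtp_sasl_password_maps = hash:/opt/postfix/conf/sasl_passwd
# Drop the default noplaintext restriction so PLAIN/LOGIN can be used; still refuse anonymous
smtp_sasl_security_options = noanonymous
smtp_sasl_mechanism_filter = plain, login
relayhost = [smtp-relay.brevo.com]:587
```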

I also created the `sasl_passwd` file with the Brevo credentials and generated the `sasl_passwd.db` file from it, which is what the `hash:` lookup above actually reads.
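A detail that is easy to get wrong here: Postfix's SASL documentation says that if `relayhost` uses the `[host]:port` form, the `sasl_passwd` key must use the same form, so the entry has to start with `[smtp-relay.brevo.com]:587` rather than just the hostname. The small helper below only builds that line; the function name, login and key are placeholders, not Brevo's real values.

```python
RELAYHOST = "[smtp-relay.brevo.com]:587"  # must match relayhost in main.cf exactly


def sasl_passwd_line(login: str, secret: str, relayhost: str = RELAYHOST) -> str:
    """Build one sasl_passwd entry in Postfix's '<key> <login>:<password>' form."""
    # Postfix splits the value at the first ':', so the login cannot contain one.
    if ":" in login:
        raise ValueError("login must not contain ':' (it separates login from password)")
    return f"{relayhost} {login}:{secret}"


if __name__ == "__main__":
    # Placeholder values; Brevo issues its own SMTP login and key.
    print(sasl_passwd_line("smtp-login@example.com", "SMTP_KEY_PLACEHOLDER"))
```

After writing the file, `postmap` has to be run on it (inside the Postfix container in a mailcow setup) to produce the `.db` file, and since it holds a plaintext secret, its permissions should be restricted.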

For the catch-all, Cloudflare forwards any inbound email to my catch-all Gmail inbox. getmail then retrieves new mail from that inbox and delivers it to the mailcow mailboxes, using the To: header as the envelope recipient.

~/.config/getmail/getmailrc:

```ini
[retriever]
type = MultidropPOP3SSLRetriever
server = pop.gmail.com
port = 995
username = gmail_email
password = gmail_app_password
# Gmail doesn't give the real envelope recipient, so the best fallback
# is the visible To: header (first occurrence, "field-name:N" format)
envelope_recipient = to:1

[destination]
# Two good choices: pick ONE and comment out the other

# 1) Direct LMTP into mailcow (Dovecot listens on port 24 internally;
#    this is the default inside the mailcow network)
type = MDA_lmtp
host = 127.0.0.1
port = 24

# 2) Or call dovecot-lda, which works even if LMTP is unreachable from the host
# type = MDA_external
# path = /usr/lib/dovecot/deliver
# user = dovecot
# arguments = ("-d", "%(recipient)")

[options]
# Comments stay on their own lines: inline comments would become part of the value
# Remove mail from Gmail once it has been handed off
delete = true
# Only retrieve new mail
read_all = false
# Set to 2 while testing
verbose = 1
```
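Because delivery keys off the visible To: header, it helps to see what that header actually yields. The sketch below uses Python's standard `email` package to pull the addresses out of To: and keep only those for my own domain, as a simplified model of routing by that header (it is not getmail's code). `example.com` stands in for the real mail domain, and the function name is hypothetical.

```python
from email import message_from_string
from email.utils import getaddresses

LOCAL_DOMAIN = "example.com"  # placeholder for the real mail domain


def local_recipients(raw_message: str, domain: str = LOCAL_DOMAIN) -> list[str]:
    """Return lowercase To: addresses that belong to `domain`."""
    msg = message_from_string(raw_message)
    # get_all returns every To: header; getaddresses splits "Name <addr>" lists.
    pairs = getaddresses(msg.get_all("To", []))
    return [
        addr.lower()
        for _name, addr in pairs
        if addr.lower().endswith("@" + domain)
    ]


if __name__ == "__main__":
    sample = (
        "From: friend@gmail.com\n"
        "To: Me <Me@Example.com>, someone@other.org\n"
        "Subject: hi\n"
        "\n"
        "body\n"
    )
    print(local_recipients(sample))
```

One limitation of this approach: mail that reaches me only via Bcc, or through a mailing list, may not have my address in To:, so the To: header alone cannot route it.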

/etc/systemd/system/getmail.service:

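```ini
# /etc/systemd/system/getmail.service
[Unit]
Description=Getmail (IMAP-IDLE) for Gmail catch-all → Mailcow
After=network-online.target dovecot.service
Wants=network-online.target

[Service]
Type=simple
User=mail
Group=mail
# ONE unbroken ExecStart= line (wrap only with backslashes).
# --rcfile must point at the getmailrc shown above; adjust the path if it lives elsewhere.
# --idle only works with an IMAP retriever (e.g. MultidropIMAPSSLRetriever);
# with the POP3 retriever above, drop --idle and run getmail periodically instead.
ExecStart=/usr/bin/getmail --getmaildir /etc/getmail --rcfile /etc/getmail/catchall.conf --idle=INBOX
Restart=on-failure
RestartSec=10s

[Install]
WantedBy=multi-user.target
```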

Future Upgradability

The next likely upgrades are adding more drives to the TrueNAS server using the JBOD, and adding more Proxmox nodes to form a cluster, which would allow high availability for virtual machines and Linux containers.